Security breaches are alarmingly frequent. Despite their clear advantages, generative AI tools raise a growing concern: the potential leak of sensitive data. The question is simple: how can we benefit from these tools while keeping our data secure, and what solutions can get us there?

Your choice: build your own AI system or trust a provider

Many enterprises already have generative AI pilots underway for code generation, text generation, or visual design. To establish a pilot, you can take one of three routes:

- Allow: The organization sets no restrictions, and employees use generative AI tools individually. Some sensitive information may already have been sent to OpenAI or other generative AI tools, and unless each user has turned off model training in their own settings, that information may also be used to train those models.

- Invest: The organization assembles a team of in-house AI experts to develop and deploy its own AI tools and practices. This is the most secure solution, but also the most difficult and the most costly in time and resources.

- Regulate: Organizations rely on solution providers such as Planisware Orchestra, which has established a framework for controlling which data types are sent out.

The “regulate” approach lets organizations control the scope of use with a reasonable deployment time. Ultimately, it offers the best benefit/security ratio at limited cost. Although it requires an organizational account with the generative AI provider, you gain an additional layer of security put in place by the third-party supplier.

What layers of data security are available with OpenAI?

As you may already be aware, OrchestraAI is built on OpenAI capabilities. Concerns about OpenAI and its mass-market tool ChatGPT stem from employees using it on an individual basis. Without a framework to secure that use, the company risks data leakage, because neither employees' usage nor the data they share is supervised or controlled.

OpenAI recognizes data security and usage as critical concerns for businesses and has implemented measures to improve oversight and provide assurances. In particular, there are several ways to prevent ChatGPT from being trained on the information users submit, so that data is not used without authorization.

- Individual licenses: If you don’t subscribe to any business offer, your employees may use ChatGPT on their own, without any control. Your data security then relies entirely on each team member. There is no organization-wide lock, but each employee can disable ChatGPT training: click your account name > Settings > Data controls > disable Chat history and training. Mistakes and oversights still happen, however, and you can never be completely sure that everyone has disabled it. The level of security could be better, don't you agree? So what solutions are there?

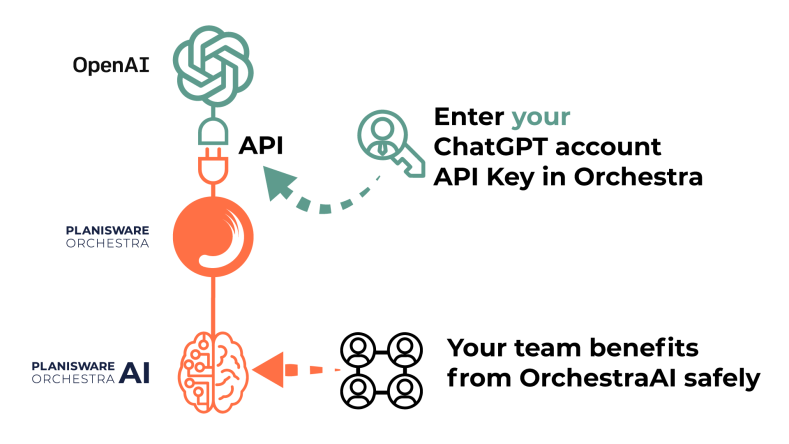

- Business Terms: OpenAI has developed several "Business" packages that give you greater control over your data. They cover two types of subscription that can be deployed across your organization, Enterprise and Team, both of which let you, as an admin, deactivate ChatGPT training for all your users. The Business Terms also cover use of the OpenAI API, which third-party providers use to integrate ChatGPT functionality into their own software. This is the API that Planisware Orchestra uses to give you access to OrchestraAI features.

Still not enough? Planisware Orchestra has also established a layer of security to give you greater control over data sent through its OrchestraAI features.

OrchestraAI framework to enhance data security

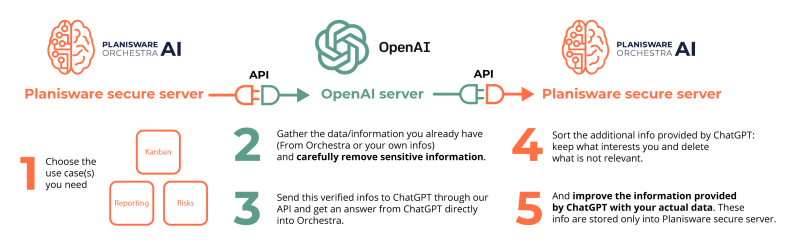

The diagram above shows, in five steps, how OrchestraAI addresses the security challenge.

As mentioned above, data transmitted to OpenAI travels through the API and, under OpenAI's Business Terms, is therefore not used to train the ChatGPT model. To further protect your information, users can remove any data sent from Orchestra that they consider too sensitive. You can never be too careful! Once you have retrieved the additional information you need from ChatGPT, you can add your own information and personalize the results. That information is stored only on the secure Planisware server and nowhere else.
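To make the redaction step more concrete, here is a minimal Python sketch of the general idea, assuming a hypothetical project record in which the user flags some fields as too sensitive to share. Only unflagged fields go into the outgoing payload, and the user's own additions are merged with the AI result locally. The names `ProjectRecord`, `build_outgoing_payload`, and `merge_locally` are illustrative only and do not come from Planisware Orchestra; the actual call to the OpenAI API is deliberately left out.

```python
from dataclasses import dataclass, field


@dataclass
class ProjectRecord:
    """Hypothetical project data held on the local (Planisware-side) server."""
    fields: dict[str, str]
    # Names of fields the user has flagged as too sensitive to send out.
    sensitive: set[str] = field(default_factory=set)


def build_outgoing_payload(record: ProjectRecord) -> dict[str, str]:
    """Return only the fields the user has not flagged as sensitive.

    In this sketch, this dict is the only data that would leave the local
    server; sending it to an external API is out of scope here.
    """
    return {k: v for k, v in record.fields.items() if k not in record.sensitive}


def merge_locally(ai_result: str, user_notes: str) -> str:
    """Combine AI-generated text with the user's own additions.

    The merged text is intended to be stored locally only; it is never
    part of an outgoing payload in this sketch.
    """
    return f"{ai_result}\n\n{user_notes}" if user_notes else ai_result


if __name__ == "__main__":
    record = ProjectRecord(
        fields={"name": "Plant upgrade", "budget": "4.2M EUR", "phase": "Design"},
        sensitive={"budget"},
    )
    print(build_outgoing_payload(record))  # {'name': 'Plant upgrade', 'phase': 'Design'}
```

The filtering happens before anything is sent, so a flagged field never has to be trusted to the external provider's data-handling terms at all.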

Still unsure about using OrchestraAI? Test it on a tightly controlled scope before rolling it out fully.

Control the scope of use

To give you peace of mind while testing our use cases, you keep full control over the scope of use:

- Choose who is allowed to use the OrchestraAI module: User rights can be managed at the organization, user group, individual, or project type level. You define who is allowed to use it right from the start.

- Define the use cases you're interested in: choose from augmented Report Generation, Risk Management process support, and Schedule Construction. You can implement them gradually.

- Change your mind if needed: OrchestraAI can be fully or partially deactivated at any time.
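The three controls above can be pictured as a single permission check. The Python sketch below is a hypothetical model, not OrchestraAI's actual configuration: `AIScopeConfig` and `is_allowed` are invented names, and the rule that a grant at any one level (organization, user group, individual, or project type) is enough is an assumption made for illustration. A global switch models full deactivation, and the set of enabled use cases models partial deactivation.

```python
from dataclasses import dataclass, field
from enum import Enum


class UseCase(Enum):
    REPORT_GENERATION = "report_generation"
    RISK_MANAGEMENT = "risk_management"
    SCHEDULE_CONSTRUCTION = "schedule_construction"


@dataclass
class AIScopeConfig:
    """Hypothetical admin settings for an AI module."""
    module_enabled: bool = True
    enabled_use_cases: set[UseCase] = field(default_factory=set)
    # Grants at each level; empty means no grant at that level.
    allow_whole_organization: bool = False
    allowed_groups: set[str] = field(default_factory=set)
    allowed_users: set[str] = field(default_factory=set)
    allowed_project_types: set[str] = field(default_factory=set)


def is_allowed(cfg: AIScopeConfig, user: str, groups: set[str],
               project_type: str, use_case: UseCase) -> bool:
    # Full deactivation: the global switch overrides everything else.
    if not cfg.module_enabled:
        return False
    # Partial deactivation: only use cases that are switched on are available.
    if use_case not in cfg.enabled_use_cases:
        return False
    # Assumed rule: a grant at any one level is sufficient.
    return (
        cfg.allow_whole_organization
        or user in cfg.allowed_users
        or bool(groups & cfg.allowed_groups)
        or project_type in cfg.allowed_project_types
    )


if __name__ == "__main__":
    cfg = AIScopeConfig(
        enabled_use_cases={UseCase.REPORT_GENERATION},
        allowed_groups={"pmo"},
    )
    print(is_allowed(cfg, "alice", {"pmo"}, "R&D", UseCase.REPORT_GENERATION))  # True
    print(is_allowed(cfg, "alice", {"pmo"}, "R&D", UseCase.RISK_MANAGEMENT))  # False
```

Starting with a single group and a single use case, as in the usage example, mirrors the gradual rollout described above; widening the scope is then a matter of adding grants or enabling more use cases.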

With OrchestraAI, you can introduce generative AI into your project management gradually, while keeping full control over outgoing data.

If you need more information about OrchestraAI and its capabilities, you can consult our article: https://planisware.com/resources/product-capabilities/orchestra-ai-project-management-enhanced-ai-fast-track-your-work

You can also contact our team directly to discuss how it could most benefit your daily project management practices.